Psychiatry Investig, Volume 10(2); 2013

Abstract

Objective

In recent years there has been an enormous increase in neuroscience research using facial expressions of emotion. Because facial emotion processing differs among ethnicities, this has created a need for ethnically specific facial expression data.

Methods

Fifty professional actors were asked to pose with each of the following facial expressions in turn: happiness, sadness, fear, anger, disgust, surprise, and neutral. A total of 283 facial pictures of 40 actors were selected to be included in the validation study. Facial expression emotion identification was performed in a validation study by 104 healthy raters who provided emotion labeling, valence ratings, and arousal ratings.

Results

A total of 259 images of 37 actors were selected for inclusion in the Extended ChaeLee Korean Facial Expressions of Emotions tool, based on the analysis of results. In these images, the actors' mean age was 38±11.1 years (range 26-60 years), with 16 (43.2%) males and 21 (56.8%) females. The consistency varied by emotion type and was highest for happiness (95.5%) and lowest for fear (49.0%). The mean scores for the valence ratings ranged from 4.0 (happiness) to 1.9 (sadness, anger, and disgust). The mean scores for the arousal ratings ranged from 3.7 (anger and fear) to 2.5 (neutral).

Conclusion

We obtained facial expressions from individuals of Korean ethnicity and performed a study to validate them. Our results provide a tool for the affective neurosciences which could be used for the investigation of mechanisms of emotion processing in healthy individuals as well as in patients with various psychiatric disorders.

Recent neuroscience research has investigated the mechanisms and neural bases of emotion processing. In these experimental studies, images of facial expressions pertaining to various specific emotions have often been used, because facial expressions are one of the most powerful means of communication between human beings.1 The importance of facial expressions in social interaction and social intelligence is widely recognized in anthropology and psychology.

In 1978, Ekman and Friesen2 developed images of 110 facial expressions of emotions that included Caucasians and African Americans of various ages. Following this, Matsumoto and Ekman3 developed the Japanese and Caucasian Facial Expressions of Emotion (JACFEE) instrument, whose reliability has been demonstrated.4 Additionally, Gur et al.5 developed and validated a set of three-dimensional color facial images expressing five emotions.

To date, facial data developed for affective neuroscience studies have typically been restricted in ethnicity and age range. Although substantial research has documented the universality of some basic emotional expressions,6,7 recent findings have demonstrated cultural differences in levels of recognition and ratings of intensity.8-10 Further, neural responses during emotion processing have been suggested to differ among ethnicities.11,12 These reports suggest that appropriate facial emotional data are needed for each ethnic group.

Our group in Korea published the standardized ChaeLee Korean Facial Expressions of Emotions tool, which consists of 44 color facial pictures of 6 professional actors.13 Subsequently, other groups of researchers have developed sets of Korean facial emotional expressions. These include Lee et al.'s14 set of 6125 expressions in the Korea University Facial Expression Collection (KUFEC), which used 49 amateur actors (25 females and 24 males, age range 20-35 years). These pictures were taken from three angles (45°, 0°, -45°), and the subject gazed in five directions (straight, left, right, upward, and downward). However, the validity data of the raters were not published, and the performers were all relatively young. Recently, Park et al.15 reported 176 expressions in their Korean Facial Expressions of Emotion (KOFEE) tool, which used 15 performers (7 males, 8 females) and showed at least 50% consistency among 105 raters. Again, the performers of the KOFEE were limited to young ages. Also, the facial expressions were elicited by activating muscles related to each specific emotion.

In the present study, we report the development of the extended ChaeLee Korean facial expressions of emotions and its validation study.

For this study, we trained 50 professional actors (25 males, 25 females) to appropriately express seven facial expressions: happiness, sadness, anger, surprise, disgust, fear, and neutral. All participants joined the study voluntarily after being fully informed of its purpose and procedure, and all of them signed a written informed consent to our use of their portraits. This study was approved by the St. Mary's Hospital, The Catholic University of Korea, Institutional Review Board.

The facial expressions were recorded by a high-definition camcorder (TRV-940, Sony, Japan). Eight well-trained medical college students (4 males, 4 females; mean age 23.4±1.4 years) reviewed the video clips and extracted frames of facial expressions that portrayed the intended emotions. Confusing or possibly misleading facial expressions were not included. The entire procedure was repeated for all facial images until the researchers and students reached a consensus. Finally, 283 images from 40 actors were selected for the study. Conspicuous features of the faces such as blemishes and moles were removed, and other properties of the images such as background, eye position, and facial brightness were adjusted to make them uniform.

One hundred and four subjects were recruited for the present study. None had a past history or current diagnosis of psychiatric disorder or a medical disorder possibly affecting brain function, and none had taken any drugs influencing motor function. Subjects who scored above the cutoff scores on the Beck Depression Inventory (BDI) or on Spielberger's State Anxiety Inventory (SAI) were excluded from participation in the validation study. The cutoff scores for the BDI were 23 for male and 24 for female participants, and the cutoff score for the SAI was 61 for both sexes.16,17
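The screening rule above can be sketched in a few lines of Python. This is only an illustration: the cutoff values come from the text, but the record layout, the field names, and the reading that a score exactly equal to the cutoff is still accepted ("above the cutoff" is excluded) are assumptions.

```python
# Cutoffs as stated in the text; everything else here is hypothetical.
BDI_CUTOFF = {"male": 23, "female": 24}
SAI_CUTOFF = 61


def is_eligible(sex: str, bdi: int, sai: int) -> bool:
    """Return True when neither score is above its cutoff.

    Assumption: a score equal to the cutoff is not "above" it,
    so such a volunteer is kept.
    """
    return bdi <= BDI_CUTOFF[sex] and sai <= SAI_CUTOFF


# Made-up volunteers, for illustration only.
volunteers = [
    {"id": "v01", "sex": "male", "bdi": 10, "sai": 40},
    {"id": "v02", "sex": "male", "bdi": 24, "sai": 40},    # BDI above 23
    {"id": "v03", "sex": "female", "bdi": 24, "sai": 61},  # at both cutoffs
    {"id": "v04", "sex": "female", "bdi": 5, "sai": 62},   # SAI above 61
]

eligible_ids = [
    v["id"] for v in volunteers if is_eligible(v["sex"], v["bdi"], v["sai"])
]
print(eligible_ids)
```

The history-based criteria (psychiatric or medical disorders, medication) are not modeled here because they are not score thresholds.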

All subjects participated voluntarily, with the objective and procedures of the experiment thoroughly explained to them prior to the study. All who agreed to participate signed an informed consent and were paid for their participation.

Prior to the main session, the subjects had practice sessions with 7-14 stimuli selected from the ChaeLee Korean Facial Expressions of Emotion images which were validated in our previous study.13 Then, in the main session, a randomly selected image of facial expression (720×480 mm) was displayed on a screen for 5 seconds. Subjects were asked to select an emotion label for the facial expression and rate its valence and arousal as quickly as possible. We used a forced-choice method for emotion labeling in which the subject selected one emotion from the seven given choices (happiness, sadness, anger, surprise, disgust, fear, or neutral).

The valence and arousal were rated on a Likert scale from 1 to 5. For the valence rating, images that conveyed the most positive or appealing feeling corresponded to 5, and the most negative to 1. Similarly, for the arousal rating, participants were directed to give a rating of 5 to an image if they were greatly aroused by it, and 1 if they felt completely relaxed and calm. To lessen the fatigue effect, the images were divided equally into two runs with a 10-minute break between them. The tasks were done in a quiet environment so that the subjects would not be distracted. The facial stimuli were presented and responses were obtained using E-PRIME v1.1 (Psychology Software Tools, Pittsburgh, PA, USA).

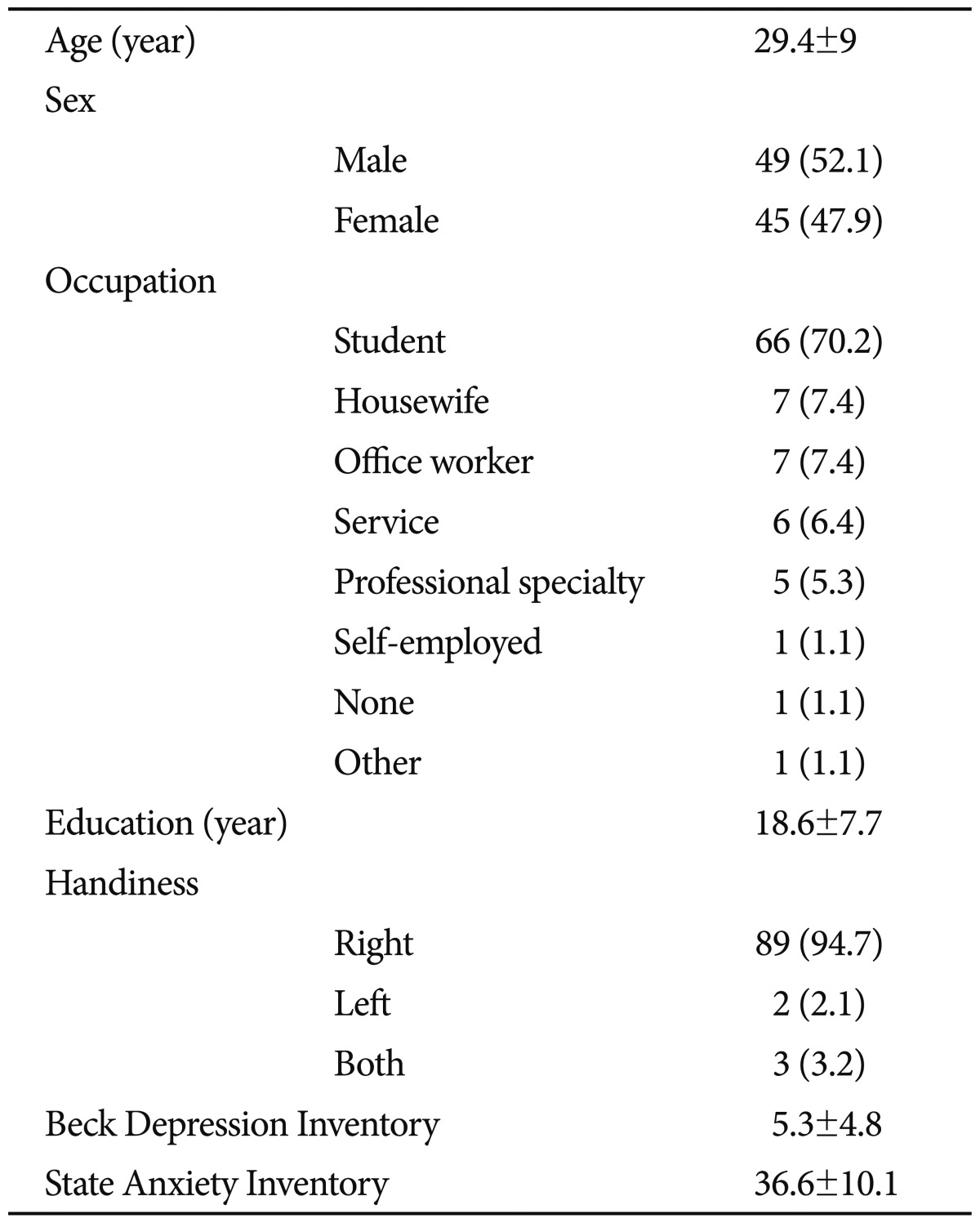

The demographic data for participants in the validation study were summarized as "mean±standard deviation" or n (%) depending on their type.

The consistency of labeling for each facial expression was estimated by computing the percentage of responses that matched the intended emotion. The valence and arousal ratings were summarized as mean±standard deviation. To test for differences in valence and arousal among emotion types, one-way ANOVA and post-hoc analysis were conducted. All analyses were conducted using SAS/PC version 9.2 (SAS Institute Inc., Cary, NC, USA).
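As a minimal sketch of the consistency measure described above, the following Python computes the percentage of raters whose label matches the intended emotion for one image, then summarizes several images as a mean with a range. The function names and all data are hypothetical, and the study itself used SAS, so this is not the authors' code.

```python
from statistics import mean


def consistency(intended: str, responses: list[str]) -> float:
    """Percentage of responses that match the intended emotion."""
    if not responses:
        raise ValueError("at least one response is required")
    hits = sum(1 for r in responses if r == intended)
    return 100.0 * hits / len(responses)


def summarize(per_image: list[float]) -> tuple[float, float, float]:
    """Mean, minimum, and maximum consistency across images of one emotion."""
    return mean(per_image), min(per_image), max(per_image)


# Made-up labels from 10 raters for a single image posed as "fear".
responses = ["fear"] * 5 + ["surprise"] * 4 + ["sadness"]
pct = consistency("fear", responses)
print(f"{pct:.1f}%")  # 50.0%

# Made-up per-image consistencies for one emotion type.
avg, low, high = summarize([80.0, 90.0, 100.0])
print(f"{avg:.1f}% ({low:.1f}-{high:.1f}%)")
```

The second print mirrors the "mean (range)" layout used when per-emotion consistency is reported.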

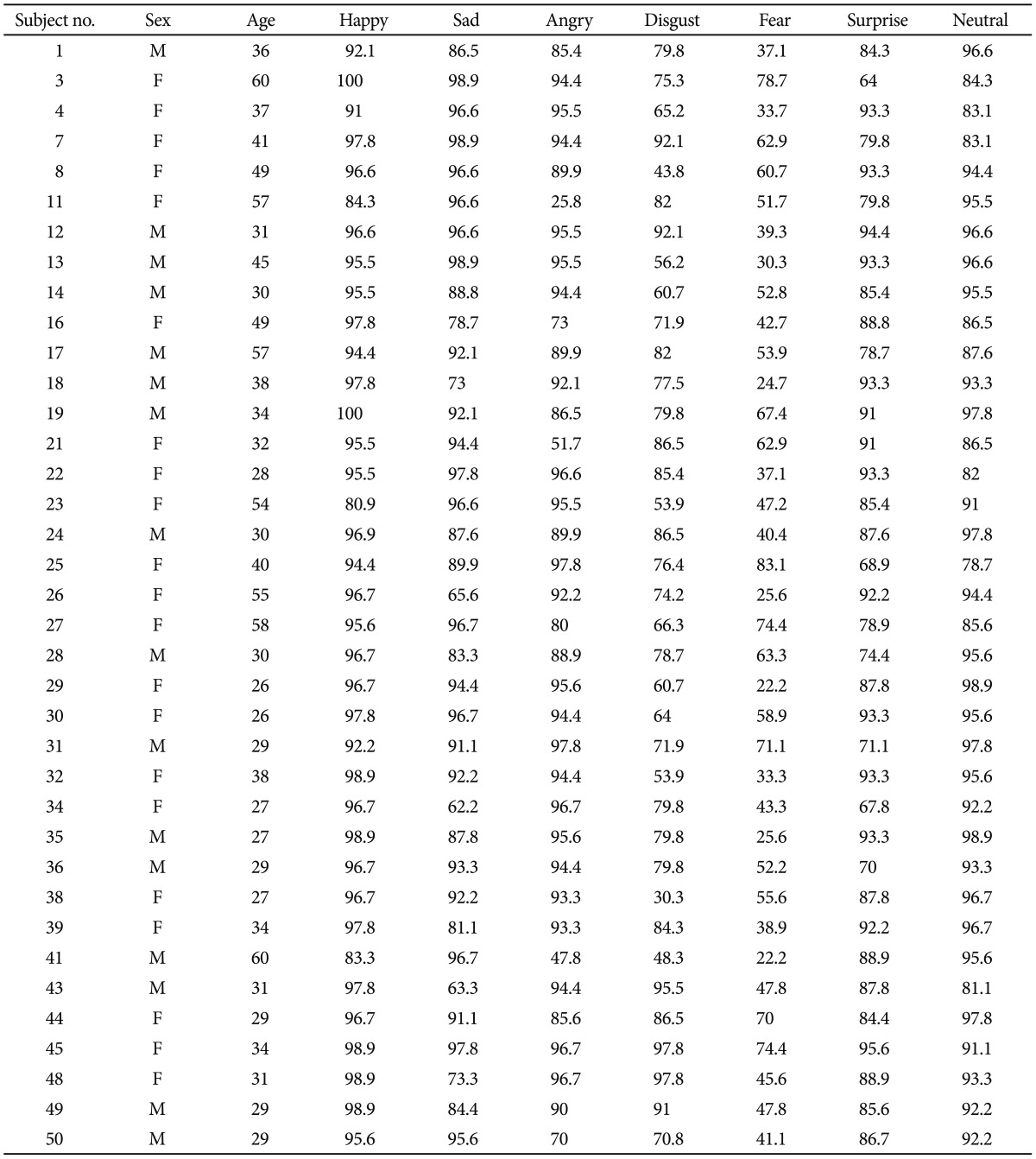

Data from 94 subjects were included in the validation study analysis, after exclusion of 8 subjects with missing data due to technical problems of the computerized emotion identification program. The average age of subjects was 29.4±9 years, 49 (52.1%) were males, and 45 (47.9%) were females. Regarding occupations, students were the majority of the subjects at 70.2%, followed by office employees (7.4%), housewives (7.4%), service workers (6.4%), and professionals (5.3%). The average number of years spent in education was 18.6±7.7, and 94.7% of subjects were right-handed. The participants were within the normal ranges of depression and anxiety scores (Table 1).

Based on the validation study results, we made a final selection of 259 pictures of 37 actors for inclusion in the Extended ChaeLee Korean Facial Expressions of Emotion tool, after excluding 3 actors' pictures due to low ratings consistency (Figure 1). The average age of the actors whose facial images were ultimately selected was 38±11.1 years (range 26-60 years), with 11 people in their 20's (29.7%), 14 in their 30's (37.8%), 5 in their 40's (13.5%), 5 in their 50's (13.5%), and 2 in their 60's (5.4%). The numbers of female and male participants were 20 (52.6%) and 18 (47.4%), respectively (Figure 2).

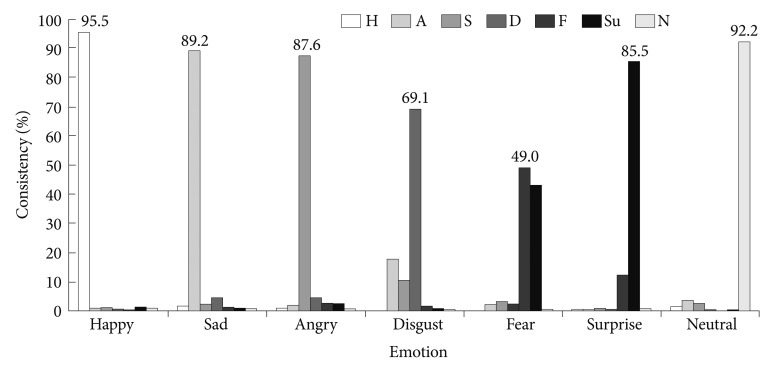

Judgments of the emotion for each facial expression image are summarized in Table 2. The average consistency, i.e., the mean percent of people who recognized a facial expression as the intended emotion, was 95.5% (80.9-100%) for happiness, 89.2% (62.2-98.9%) for sadness, 87.6% (25.8-97.8%) for anger, 85.5% (64.0-95.6%) for surprise, 69.1% (21.1-92.1%) for disgust, 49.0% (22.2-83.1%) for fear, and 92.2% (78.7-98.9%) for neutral facial expression. Consistency for fearful expressions was the lowest among the emotions. A confusion matrix of the facial expressions showed that fear was most often confused with surprise (43.1%). Also, disgust facial expressions were sometimes confused with happiness, anger, or other emotions (Figure 3).
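A confusion matrix of this kind can be built by counting, for each intended emotion, how often each of the seven labels was chosen, and converting each row to percentages. The sketch below assumes trials are available as (intended, chosen) pairs; that layout, the function name, and the data are hypothetical.

```python
EMOTIONS = [
    "happiness", "sadness", "anger", "surprise", "disgust", "fear", "neutral",
]


def confusion_matrix(trials):
    """Map intended emotion -> chosen label -> percentage of responses.

    trials: iterable of (intended, chosen) pairs from the forced-choice task.
    A row with no trials is reported as all zeros.
    """
    counts = {i: {c: 0 for c in EMOTIONS} for i in EMOTIONS}
    for intended, chosen in trials:
        counts[intended][chosen] += 1

    matrix = {}
    for intended, row in counts.items():
        total = sum(row.values())
        matrix[intended] = {
            c: (100.0 * n / total if total else 0.0) for c, n in row.items()
        }
    return matrix


# Made-up trials: "fear" images often labeled "surprise".
trials = (
    [("fear", "fear")] * 5
    + [("fear", "surprise")] * 4
    + [("fear", "sadness")]
    + [("happiness", "happiness")] * 10
)
m = confusion_matrix(trials)
print(m["fear"]["surprise"])  # 40.0
```

The diagonal entries of such a matrix are the consistency values, and the off-diagonal entries show which emotions are mistaken for which.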

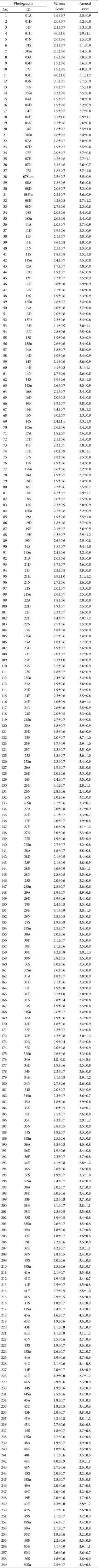

The mean valence and arousal ratings for the facial expressions are summarized in Table 3. The mean valence ratings were 4.0±0.2 (3.3-4.4) for happy facial expressions, 2.6±0.1 (2.3-2.8) for surprise, 2.1±0.2 (1.9-2.5) for fear, 1.9±0.2 (1.8-2.4) for sadness, 1.9±0.1 (1.6-2.1) for anger, 1.9±0.1 (1.7-2.1) for disgust, and 2.7±0.1 (2.5-3.1) for neutral. ANOVA and post-hoc analysis categorized 4 groups from positive to negative: happiness, surprise and neutral, fear, and others (sadness, anger, and disgust) (F=372.261, p<0.001).

The mean arousal ratings were 3.7±0.2 (3.3-4.3) for anger, 3.7±0.1 (3.3-4.0) for fear, 3.4±0.2 (3.0-3.7) for sadness, 3.4±0.1 (3.2-3.7) for disgust, 3.4±0.1 (3.2-3.6) for surprise, 2.9±0.1 (2.7-3.1) for happiness, and 2.5±0.1 (2.3-2.7) for neutral. ANOVA and post-hoc analysis revealed 4 groups from highest to lowest arousal rating: anger and fear, sadness and disgust, surprise and happiness, and neutral (F=54.227, p<0.001).
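For readers unfamiliar with the F statistics quoted above, the following Python sketch computes a one-way ANOVA F value from scratch as the ratio of between-group to within-group mean squares. It is illustrative only: the ratings are made up, the text does not say what the unit of analysis was (treating each value as one image's mean rating is an assumption), and the p-value and post-hoc grouping are omitted.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of numeric groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [sum(g) / len(g) for g in groups]

    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(
        len(g) * (gm - grand_mean) ** 2 for g, gm in zip(groups, group_means)
    )
    # Variation of observations around their own group mean.
    ss_within = sum(
        (x - gm) ** 2 for g, gm in zip(groups, group_means) for x in g
    )

    df_between = k - 1
    df_within = n_total - k
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within


# Hypothetical per-image mean valence ratings for three emotion types.
valence = {
    "happiness": [4.0, 4.2, 3.8, 4.1],
    "neutral": [2.7, 2.6, 2.8, 2.7],
    "anger": [1.9, 1.8, 2.0, 1.9],
}
f_value, df_b, df_w = one_way_anova_f(list(valence.values()))
print(f"F({df_b}, {df_w}) = {f_value:.3f}")
```

In practice a statistics package would be used instead; for example, `scipy.stats.f_oneway` returns both the F statistic and the p-value.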

In the present study, the authors obtained a set of facial emotional expressions to create the Extended ChaeLee Korean Facial Expressions of Emotions tool (ChaeLee-E), composed of images of 37 actors of a wide age range (26-60 years). About 40% of the actors were in their thirties, 5 were in their 50's, and 2 were in their 60's. To our knowledge, the ChaeLee-E is the first to include Korean facial expression images for a wide range of ages. Previous neuroscience studies have used facial expressions only of young actors, yet previous findings have suggested an aging effect on facial emotion recognition.18-20 However, no data have been available about the emotion recognition of older people when they see facial expressions of younger people or people their own age, even though this is an interesting research topic. Using the ChaeLee-E could foster the examination of the interaction effects of age in the images with age in the observers.

For the validation study, 94 healthy subjects with an approximately even sex distribution provided data for analysis. The average consistency for each emotion was similar to that in our previous study13 and in other studies.5,15 Specifically, happiness showed the highest consistency, and fear and disgust showed the lowest. Previous studies have consistently reported that happy expressions are the most accurately recognized of all the emotions.5 This may be because happiness was the only positive emotion in the study, and all the others presented were negative emotions. Also, according to Ekman and Friesen,2 the happiness expression is produced by using only the zygomatic major muscle, whereas negative emotions are produced by combinations of overlapping facial muscles, which leads to difficulty in differentiating among negative emotions.

Following happiness, the consistency for sadness was the next highest among the emotional expressions (89.1%). Shioiri et al.21 suggested that sadness may draw sympathetic responses from others, while other negative emotions such as anger, disgust, and fear seem to elicit negative responses from observers. This may help to explain why sadness had more consistent recognition than the other negative emotions.

The consistency ratings for disgust and fear were the lowest among the emotional expressions, showing a wide variation in labeling. This may be because emotion judgments are affected by the complexity of the facial components involved in the expressions. Compared with the happy expression, the fear expression is complex, given the number of muscles innervated.4 Also, previous studies that showed low recognition rates for negative emotions such as fear, anger, and disgust in Japanese and Chinese populations might suggest a similar cultural influence on the recognition of facial expressions in the Korean population.22,23 The confusion matrix of the facial expressions shows that fear was most often confused with surprise (43.2%). Also, the disgust facial expressions were sometimes confused with happiness, anger, or other emotions (Figure 3).

In addition to labeling discrete emotions for each facial expression, we also measured how the participants perceived the internal state of the actors in terms of the broad bipolar dimensions of valence and arousal. Regarding the valence of the facial expressions, positive pictures were rated as positive, and negative pictures were rated as negative, while neutral pictures were rated as a little negative. Surprise facial expressions were rated as having valences similar to those of neutral expressions. Sad, angry, and disgust facial expressions were most negatively perceived by participants. These findings are consistent with previous research that differentiated the valence of facial expressions as positive, neutral, and negative (sad, anger, and fear were seen as having negative valence).24

Regarding the arousal ratings, the highest arousals were for fear and anger, while the lowest were for neutral, with sadness, disgust, surprise, and happiness falling between them.

A previous study showed that fear and anger were highly arousing emotions, as evidenced by the degree of heart rate increase.25 Also, earlier work has shown that fear is a negatively valenced, highly activating emotion.26

In conclusion, the authors were able to obtain high quality standardized Korean facial expressions of emotions. This set of Korean facial expressions can be used as a tool for the affective neurosciences and for cultural psychiatry, and it thus contributes to the investigation of mechanisms of emotion processing in healthy individuals as well as patients with various psychiatric disorders.

Acknowledgments

This study was supported by a grant from the Korean Research Foundation (2006-2005152).

The following medical students significantly contributed to the extraction and selection of facial expressions: Seung-kyu Choi, Seok HwangBo, HaeWon Kim, Hyein Kim, Jieun Kim, Byung-hoon Park, Sangeun Yang, and SeokJae Yoon.

Also, the authors would like to express special thanks to Jihyun Moon for contributing to the image processing of the photographs.

References

1. Carton JS, Kessler EA, Pape CL. Nonverbal decoding skills and relationship well-being in adults. J Nonverbal Behav 1999;23:91-100.

2. Ekman P, Friesen W. Facial Action Coding System. Palo Alto, CA: Consulting Psychologists Press; 1978.

3. Matsumoto D, Ekman P. Japanese and Caucasian Facial Expressions of Emotion (JACFEE). [Slides]. San Francisco, CA: San Francisco State University, Department of Psychology, Intercultural and Emotion Research Laboratory; 1988.

4. Biehl M, Matsumoto D, Ekman P, Hearn V, Heider K, Kudoh T, et al. Matsumoto and Ekman's Japanese and Caucasian Facial Expressions of Emotion (JACFEE): reliability data and cross-national differences. J Nonverbal Behav 1997;21:3-21.

5. Gur RC, Sara R, Hagendoorn M, Marom O, Hughett P, Macy L, et al. A method for obtaining 3-dimensional facial expressions and its standardization for use in neurocognitive studies. J Neurosci Methods 2002;115:137-143. PMID: 11992665.

6. Ekman P. Strong evidence for universals in facial expressions: a reply to Russell's mistaken critique. Psychol Bull 1994;115:268-287. PMID: 8165272.

7. Izard CE. Innate and universal facial expressions: evidence from developmental and cross-cultural research. Psychol Bull 1994;115:288-299. PMID: 8165273.

8. Russell JA. Is there universal recognition of emotion from facial expression? A review of the cross-cultural studies. Psychol Bull 1994;115:102-141. PMID: 8202574.

9. Gosselin P, Larocque C. Facial morphology and children's categorization of facial expressions of emotions: a comparison between Asian and Caucasian faces. J Genet Psychol 2000;161:346-358. PMID: 10971913.

10. Elfenbein HA, Ambady N. When familiarity breeds accuracy: cultural exposure and facial emotion recognition. J Pers Soc Psychol 2003;85:276-290. PMID: 12916570.

11. Golby AJ, Gabrieli JD, Chiao JY, Eberhardt JL. Differential responses in the fusiform region to same-race and other-race faces. Nat Neurosci 2001;4:845-850. PMID: 11477432.

12. Lee KU, Khang HS, Kim KT, Kim YJ, Kweon YS, Shin YW, et al. Distinct processing of facial emotion of own-race versus other-race. Neuroreport 2008;19:1021-1025. PMID: 18580572.

13. Lee WH, Chae JH, Bahk WM, Lee KU. Development and its preliminary standardization of pictures of facial expressions for affective neurosciences. J Korean Neuropsychiatr Assoc 2004;43:552-558.

14. Lee TH, Lee KY, Lee K, Choi JS, Kim HT. The Korea University Facial Expression Collection: KUFEC. Lab. Seoul, Korea: Lab. of Behavioral Neuroscience, Dept. of Psychology; 2006.

15. Park JY, Oh JM, Kim SY, Lee MK, Lee CR, Kim BR, et al. Korean Facial Expressions of Emotion (KOFEE). Seoul, Korea: Section of Affect & Neuroscience, Institute of Behavioral Science in Medicine, Yonsei University College of Medicine; 2011.

16. Rhee MK, Lee YH, Park SH, Sohn CH, Chung YC, Hong SK, et al. A standardization study of Beck Depression Inventory I - Korean Version (K-BDI): reliability and factor analysis. Korean J Psychopathol 1995;4:77-95.

17. Kim JT. Relationship between Trait Anxiety and Sociality. Seoul: Korea University Medical College; 1978. Postgraduate Dissertation.

18. Brosgole L, Weisman J. Mood recognition across the ages. Int J Neurosci 1995;82:169-189. PMID: 7558648.

19. Mill A, Allik J, Realo A, Valk R. Age-related differences in emotion recognition ability: a cross-sectional study. Emotion 2009;9:619-630. PMID: 19803584.

20. Ruffman T, Henry JD, Livingstone V, Phillips LH. A meta-analytic review of emotion recognition and aging: implications for neuropsychological models of aging. Neurosci Biobehav Rev 2008;32:863-881. PMID: 18276008.

21. Shioiri T, Someya T, Helmeste D, Tang SW. Misinterpretation of facial expression: a cross-cultural study. Psychiatry Clin Neurosci 1999;53:45-50. PMID: 10201283.

22. Shioiri T, Someya T, Helmeste D, Tang SW. Cultural difference in recognition of facial emotional expression: contrast between Japanese and American raters. Psychiatry Clin Neurosci 1999;53:629-633. PMID: 10687742.

23. Huang Y, Tang S, Helmeste D, Shioiri T, Someya T. Differential judgement of static facial expressions of emotions in three cultures. Psychiatry Clin Neurosci 2001;55:479-483. PMID: 11555343.

24. Britton JC, Taylor SF, Sudheimer KD, Liberzon I. Facial expressions and complex IAPS pictures: common and differential networks. Neuroimage 2006;31:906-919. PMID: 16488159.

25. Ekman P, Levenson RW, Friesen WV. Autonomic nervous system activity distinguishes among emotions. Science 1983;221:1208-1210. PMID: 6612338.

26. Faith M, Thayer JF. A dynamical systems interpretation of a dimensional model of emotion. Scand J Psychol 2001;42:121-133. PMID: 11321635.

Figure 2

Age and sex distribution of actors in the final selections for the Extended ChaeLee Korean Facial Expressions of Emotions.

Figure 3

Confusion matrix of the Extended ChaeLee Korean Facial Expressions of Emotions according to each emotion type. H: happiness, A: anger, S: sadness, D: disgust, F: fear, Su: surprise, N: neutral.