1. Rubin IL, Crocker AC. Developmental disabilities: delivery of medical care for children and adults. Philadelphia: Lea & Febiger; 1989.

5. Cakir J, Frye RE, Walker SJ. The lifetime social cost of autism: 1990-2029. Res Autism Spectr Disord 2020;72:101502.

6. Genereaux D, van Karnebeek CD, Birch PH. Costs of caring for children with an intellectual developmental disorder. Disabil Health J 2015;8:646-651.

8. Kakchapati S, Pratap KCS, Giri S, Sharma S. Factors associated with early child development in Nepal - a further analysis of multiple indicator cluster survey 2019. Asian J Soc Health Behav 2023;6:21-29.

9. McKenzie K, Megson P. Screening for intellectual disability in children: a review of the literature. J Appl Res Intellect Disabil 2012;25:80-87.

10. von Suchodoletz W. [Early identification of children with developmental language disorders - when and how?]. Z Kinder Jugendpsychiatr Psychother 2011;39:377-385. German.

12. Lee KY, Chen CY, Chen JK, Liu CC, Chang KC, Fung XCC, et al. Exploring mediational roles for self-stigma in associations between types of problematic use of internet and psychological distress in youth with ADHD. Res Dev Disabil 2023;133:104410.

13. Chan Y, Chan YY, Cheng SL, Chow MY, Tsang YW, Lee C, et al. Investigating quality of life and self-stigma in Hong Kong children with specific learning disabilities. Res Dev Disabil 2017;68:131-139.

16. McCarty P, Frye RE. Early detection and diagnosis of autism spectrum disorder: why is it so difficult? Semin Pediatr Neurol 2020;35:100831.

17. Tippelt S, Kühn P, Grossheinrich N, von Suchodoletz W. [Diagnostic accuracy of language tests and parent rating for identifying language disorders]. Laryngorhinootologie 2011;90:421-427. German.

24. Deutsch SI, Raffaele CT. Understanding facial expressivity in autism spectrum disorder: an inside out review of the biological basis and clinical implications. Prog Neuropsychopharmacol Biol Psychiatry 2019;88:401-417.

26. Zaja RH, Rojahn J. Facial emotion recognition in intellectual disabilities. Curr Opin Psychiatry 2008;21:441-444.

29. Scotland JL, Cossar J, McKenzie K. The ability of adults with an intellectual disability to recognise facial expressions of emotion in comparison with typically developing individuals: a systematic review. Res Dev Disabil 2015;41-42:22-39.

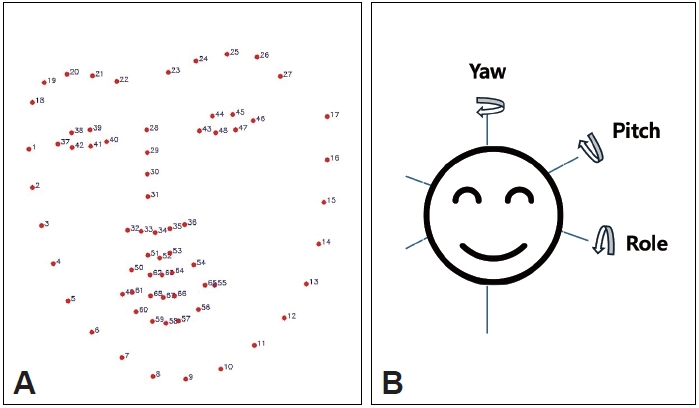

30. Happy SL, Routray A. Automatic facial expression recognition using features of salient facial patches. IEEE Trans Affect Comput 2015;6:1-12.

32. Bulat A, Tzimiropoulos G. How far are we from solving the 2D & 3D face alignment problem? (and a dataset of 230,000 3D facial landmarks). Proceedings of the IEEE International Conference on Computer Vision (ICCV); 2017 Oct 22-29; Venice, Italy. New York: IEEE, 2017, p.1021-1030.

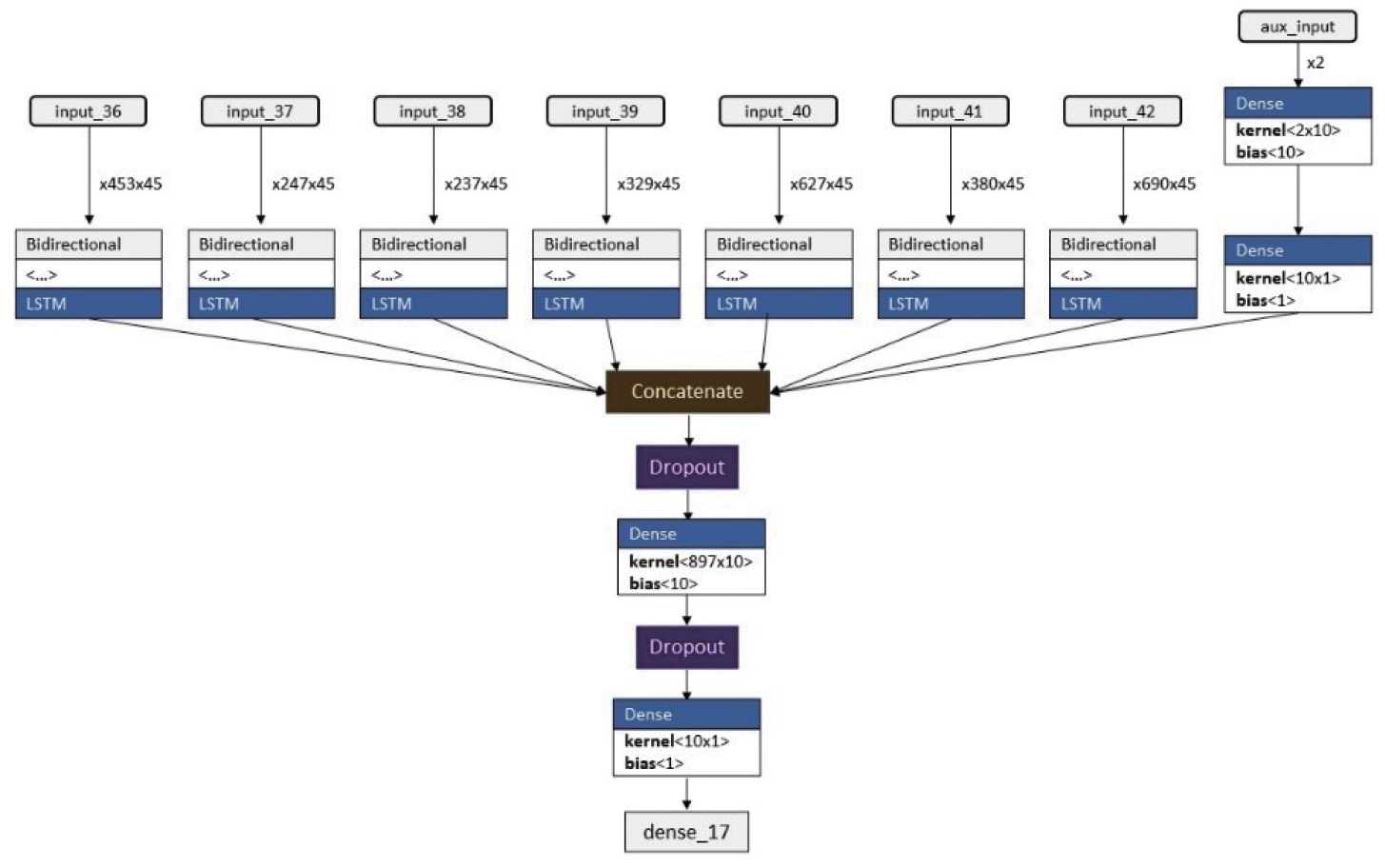

33. Kwon DH, Kim JB, Heo JS, Kim CM, Han YH. Time series classification of cryptocurrency price trend based on a recurrent LSTM neural network. J Inf Process Syst 2019;15:694-706.

37. Juneja M, Sharma S, Mukherjee SB. Sensitivity of the autism behavior checklist in Indian autistic children. J Dev Behav Pediatr 2010;31:48-49.

38. Hamilton S. Screening for developmental delay: reliable, easy-to-use tools. J Fam Pract 2006;55:415-422.

44. Maleka BK, Van Der Linde J, Glascoe FP, Swanepoel W. Developmental screening - evaluation of an m-Health version of the parents evaluation developmental status tools. Telemed J E Health 2016;22:1013-1018.

48. Jaiswal S, Valstar MF, Gillott A, Daley D. Automatic detection of ADHD and ASD from expressive behaviour in RGBD data. Proceedings of the 12th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2017); 2017 May 30-June 3; Washington, DC, USA. New York: IEEE, 2017, p.762-769.

50. Mottron L, Mineau S, Martel G, Bernier CS, Berthiaume C, Dawson M, et al. Lateral glances toward moving stimuli among young children with autism: early regulation of locally oriented perception? Dev Psychopathol 2007;19:23-36.

51. Hellendoorn A, Langstraat I, Wijnroks L, Buitelaar JK, van Daalen E, Leseman PP. The relationship between atypical visual processing and social skills in young children with autism. Res Dev Disabil 2014;35:423-428.